Defining high-level requirements

Let us define some requirements at a high level:

- An AWS Lambda function that takes an input image from S3, sends it to an OpenAI vision model, and returns a description of what is in the image.

- The entire infrastructure is created in the cloud in an automated way.

- Every change is deployed automatically.

Let's go!

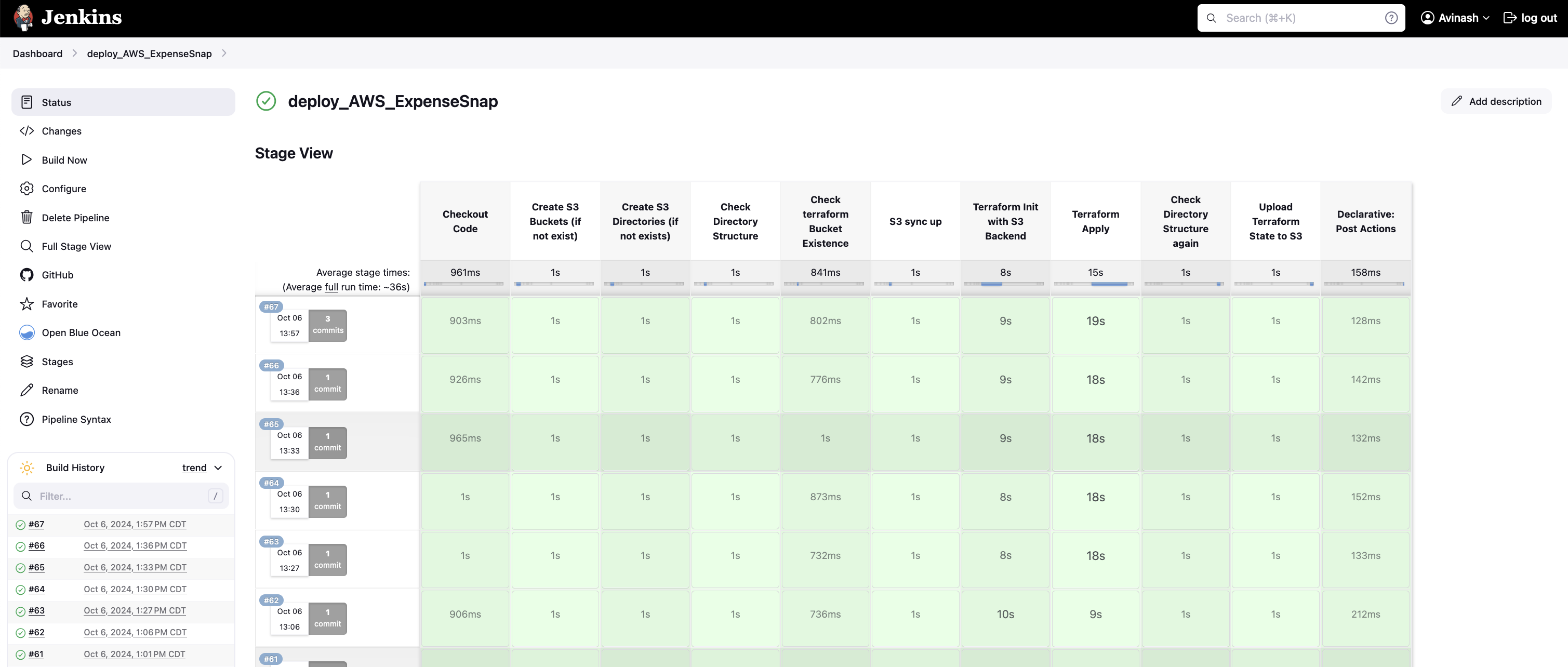

Jenkins

Set up AWS credentials in Jenkins so that it can communicate with AWS, as well as a GitHub token so that it can check out your latest code.

Jenkins pipelines are written in a language called Groovy.

A sample Groovy script is easy to read and understand.

Sample code from the pipeline is shown below.

    pipeline {
        agent any

        environment {
            // Jenkins credentials stored under these IDs
            AWS_ACCESS_KEY_ID = credentials('AWS_ACCESS_KEY_ID')
            AWS_SECRET_ACCESS_KEY = credentials('AWS_SECRET_ACCESS_KEY')
            S3_KEY = 'dev/terraform.tfstate'
            S3_BUCKET_TERRAFORM = 'expensesnap-terraform-state'
            S3_BUCKET_APP_CODE = 'expensesnap-app-code'
            AWS_REGION = 'us-east-1'
            PATH = "/opt/homebrew/bin:/usr/bin:/bin:/usr/sbin:/sbin:$PATH"
        }

        options {
            ansiColor('xterm')
        }

        stages {
            stage('Checkout Code') {
                steps {
                    git branch: 'main', url: 'https://github.com/aviravipati/ExpenseSnap_backend.git'
                }
            }

            stage('Create S3 Buckets (if not exist)') {
                steps {
                    script {
                        sh """
                            # Check if S3 buckets exist, if not, create them
                            aws s3 ls s3://$S3_BUCKET_TERRAFORM || aws s3 mb s3://$S3_BUCKET_TERRAFORM --region $AWS_REGION
                            aws s3 ls s3://$S3_BUCKET_APP_CODE || aws s3 mb s3://$S3_BUCKET_APP_CODE --region $AWS_REGION
                        """
                    }
                }
            }

            stage('Check Directory Structure') {
                steps {
                    sh 'ls -la'
                    sh 'ls -la app/lambda'
                    sh 'ls -la terraform'
                }
            }
        }

        // Runs after all stages, whether they passed or failed
        post {
            always {
                cleanWs()
            }
        }
    }

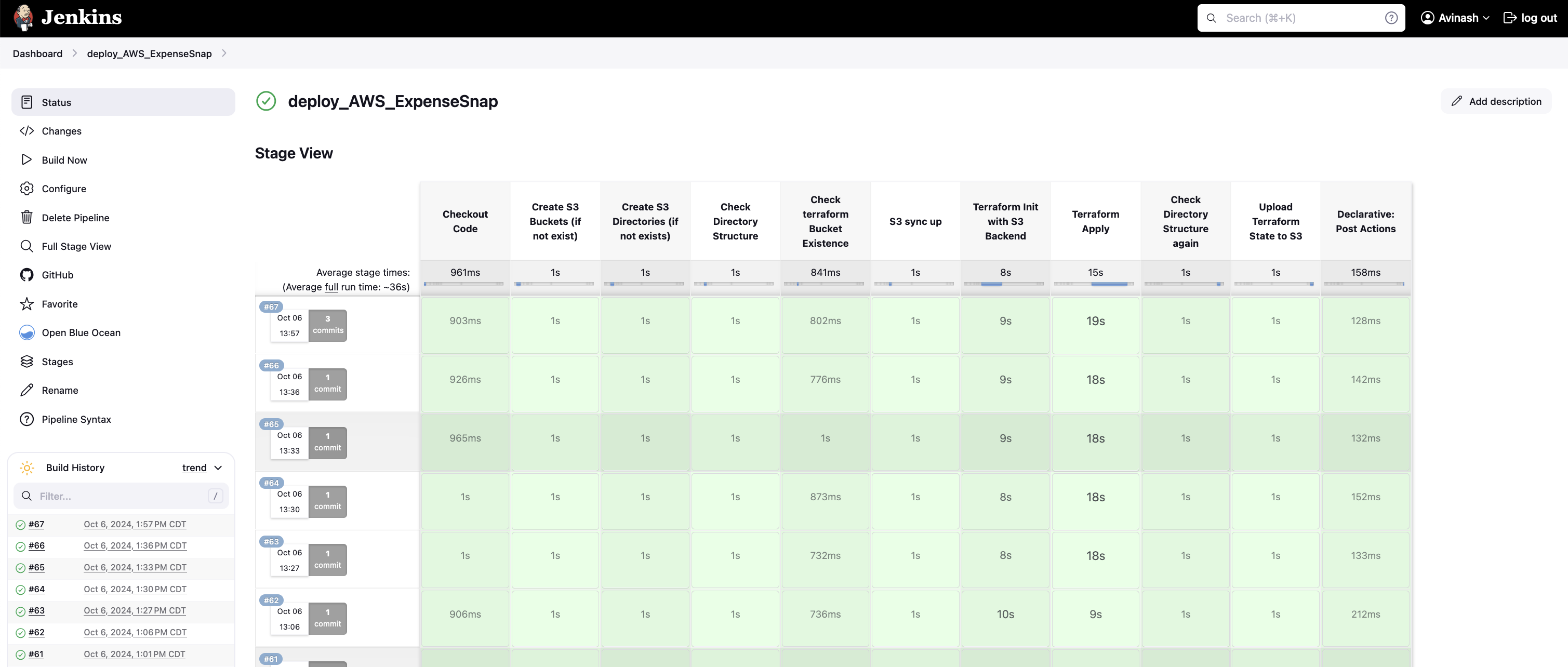

Pipeline with stages.

Each time the pipeline runs, it picks up the latest code from Git and uploads the zip file to S3, which is then used as the source code for the Lambda function.

In the same run, it also creates the infrastructure needed.
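To make the packaging step more concrete, here is a minimal Python sketch of zipping a Lambda source directory. The function name `build_lambda_zip` is hypothetical and not taken from the repository; the real pipeline may just as well use the `zip` command and `aws s3 cp`.

```python
import io
import zipfile
from pathlib import Path


def build_lambda_zip(source_dir):
    """Zip every file under source_dir, storing paths relative to source_dir.

    Hypothetical helper for illustration; returns the archive as bytes.
    """
    source = Path(source_dir)
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source.rglob("*")):
            if path.is_file():
                # Lambda expects the handler file at the root of the archive
                archive.write(path, path.relative_to(source).as_posix())
    return buffer.getvalue()
```

The returned bytes could then be uploaded to the app-code bucket, for example with boto3's `put_object`, before Terraform points the Lambda function at that object.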

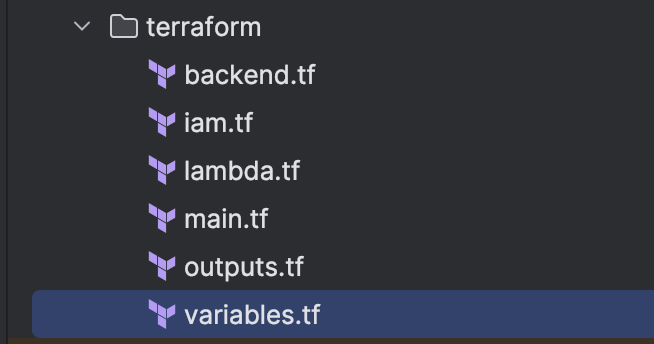

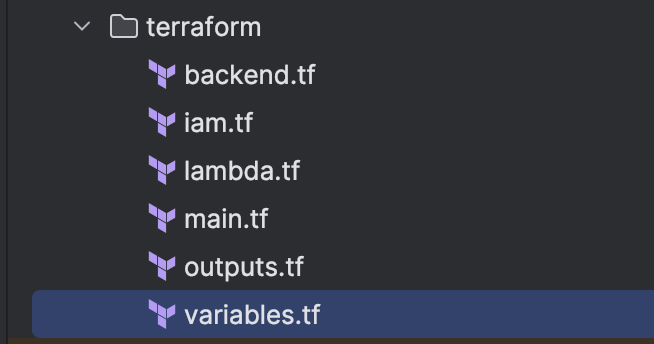

Terraform is a popular infrastructure-as-code tool, and its providers cover most services across the major cloud providers.

It handles creating, updating, and destroying infrastructure.

Terraform decides what to create, update, or delete by comparing your configuration against a state file called terraform.tfstate. In our case the state is stored in S3 as the backend, so Terraform checks against the file in S3 at the start of a run and saves it back once done.

    terraform init \
      -backend-config="bucket=$S3_BUCKET_TERRAFORM" \
      -backend-config="key=$S3_KEY" \
      -backend-config="region=$AWS_REGION"

We are going to create a few Terraform files, split up so that changes are easy to make.

The main focus areas are IAM and Lambda.

We create a role that our Lambda will assume (via STS) and attach policies that allow it to get files from S3 (with access only to the bucket we need), write to CloudWatch Logs, and read the OpenAI secret from Secrets Manager.
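As an illustration of what those policies contain, the sketch below builds the two JSON policy documents as Python dictionaries. In the project itself they are defined in Terraform; the function names, the `Sid` values, and the example ARNs here are assumptions made for this example.

```python
import json


def lambda_trust_policy():
    """Trust policy that lets the Lambda service assume the role via STS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def lambda_permissions_policy(bucket_name, secret_arn):
    """Least-privilege permissions: one bucket, logs, and one secret."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadUploadedImages",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # Only objects in the one bucket the Lambda needs
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                "Sid": "WriteLogs",
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "arn:aws:logs:*:*:*",
            },
            {
                "Sid": "ReadOpenAISecret",
                "Effect": "Allow",
                "Action": ["secretsmanager:GetSecretValue"],
                "Resource": secret_arn,
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID and secret suffix, for illustration only
    example_arn = "arn:aws:secretsmanager:us-east-1:123456789012:secret:openAI_key-AbCdEf"
    print(json.dumps(lambda_permissions_policy("my.ios.s3uploads", example_arn), indent=2))
```

Scoping the S3 and Secrets Manager statements to a single bucket and a single secret keeps the role from reading anything else in the account.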

App Code

Our application code mainly consists of a Lambda function (the handler), which does three things:

- Gets the OpenAI API key from Secrets Manager for the OpenAI API call.

- Generates a presigned URL for our S3 file, so that we can share it securely with OpenAI.

- Returns a JSON response with the description of the image.
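The handler calls two helpers, `get_secret` and `generate_presigned_url`, whose bodies are not shown in this post. The following is a minimal sketch of how they might look with boto3, not the author's actual implementation. The optional `client` parameter, the 300-second expiry, and the assumption that the secret is stored as a plain string (rather than a JSON document) are all choices made for this example.

```python
def get_secret(secret_name, client=None):
    """Return the secret string stored under secret_name, or None on failure."""
    if client is None:
        import boto3  # imported lazily so a stub client can be passed in tests
        client = boto3.client("secretsmanager")
    try:
        response = client.get_secret_value(SecretId=secret_name)
        # Assumes the secret was saved as plain text, not as key/value JSON
        return response.get("SecretString")
    except Exception as e:
        print(f"Error retrieving secret {secret_name}: {e}")
        return None


def generate_presigned_url(bucket_name, object_key, expires_in=300, client=None):
    """Return a time-limited GET URL for the S3 object, or None on failure."""
    if client is None:
        import boto3
        client = boto3.client("s3")
    try:
        return client.generate_presigned_url(
            "get_object",
            Params={"Bucket": bucket_name, "Key": object_key},
            ExpiresIn=expires_in,  # seconds until the URL stops working
        )
    except Exception as e:
        print(f"Error generating presigned URL: {e}")
        return None
```

A short expiry keeps the window small in which the shared URL can be used, while the bucket itself stays private.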

    import json

    from openai import OpenAI

    # get_secret() and generate_presigned_url() are helper functions
    # that are not shown in this excerpt.


    def describe_image(image_url, api_key):
        """Describe the image using an OpenAI vision-capable model (gpt-4o)."""
        client = OpenAI(api_key=api_key)
        try:
            response = client.chat.completions.create(
                model="gpt-4o",
                messages=[
                    {
                        "role": "user",
                        "content": [
                            {"type": "text", "text": "Describe this image in detail."},
                            {"type": "image_url", "image_url": {"url": image_url}},
                        ],
                    }
                ],
                max_tokens=300,
            )
            return response.choices[0].message.content.strip()
        except Exception as e:
            print(f"Error calling OpenAI API: {e}")
            return None


    def handler(event, context):
        # Get OpenAI API key from AWS Secrets Manager
        api_key = get_secret("openAI_key")
        if not api_key:
            return {
                'statusCode': 500,
                'body': json.dumps('Failed to retrieve OpenAI API key')
            }

        # Generate presigned URL for the S3 object
        bucket_name = "my.ios.s3uploads"
        object_key = "photo_AB43147A-853A-4186-A4A0-D082256FADA1.jpg"
        presigned_url = generate_presigned_url(bucket_name, object_key)
        if not presigned_url:
            return {
                'statusCode': 500,
                'body': json.dumps('Failed to generate presigned URL for S3 object')
            }

        # Describe the image
        image_description = describe_image(presigned_url, api_key)
        if not image_description:
            return {
                'statusCode': 500,
                'body': json.dumps('Failed to describe image')
            }

        return {
            'statusCode': 200,
            'body': json.dumps({
                'message': 'Image description generated successfully',
                'description': image_description
            })
        }

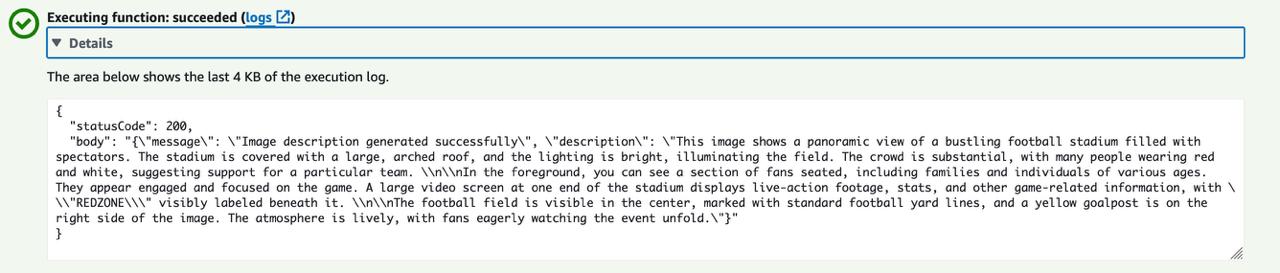

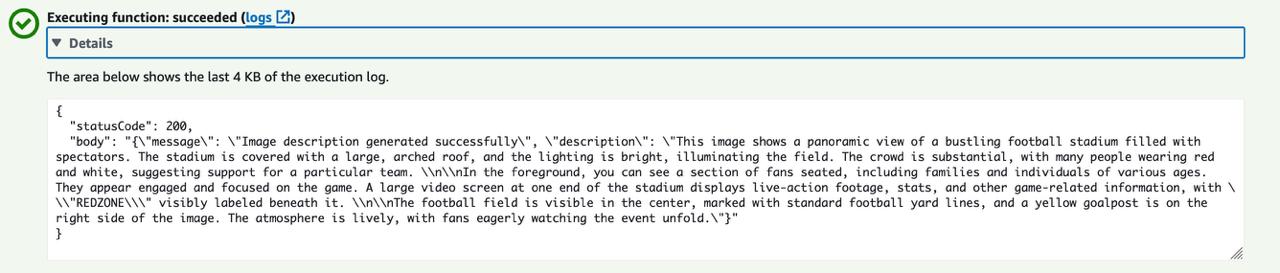

Voilà, the response from Lambda.
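The handler above hard-codes the bucket name and object key. If the Lambda were triggered by an S3 event notification, which is an assumption and not something this post sets up, those two values could be read from the event instead. A minimal sketch, with `extract_s3_object` as a hypothetical helper name:

```python
from urllib.parse import unquote_plus


def extract_s3_object(event):
    """Return (bucket_name, object_key) from the first S3 event record.

    Returns None if the event does not look like an S3 notification.
    """
    try:
        record = event["Records"][0]["s3"]
        bucket_name = record["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events (spaces become '+')
        object_key = unquote_plus(record["object"]["key"])
        return bucket_name, object_key
    except (KeyError, IndexError, TypeError):
        return None
```

Inside the handler, the result could replace the two hard-coded assignments, with a 400 response returned when the function yields `None`.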