This page is a journal of my learning and development of the app.

I used Claude AI every step of the way to help me understand topics and code, and ChatGPT for prototyping and logo generation.

Why?

Plant identifier is one of the top 100 grossing apps in the Apple App Store. I want to understand how it works, and through this exercise we will try to build something similar.

What we need

- Data

- Model

- iOS App

- API

PlantNet is a plant identification dataset containing 300K images. As per the license, we can use the data for commercial and non-commercial purposes. Now that we have data, we need to find a model that can identify the plant in an image, so that we can provide the user with care instructions.

There has never been a better time to build with AI.

Let's answer some questions

- Are there existing pre-trained models?

Yes, we can use pre-trained CNN models like ResNet, VGG, or Inception.

- Can we include this model directly in our iOS app?

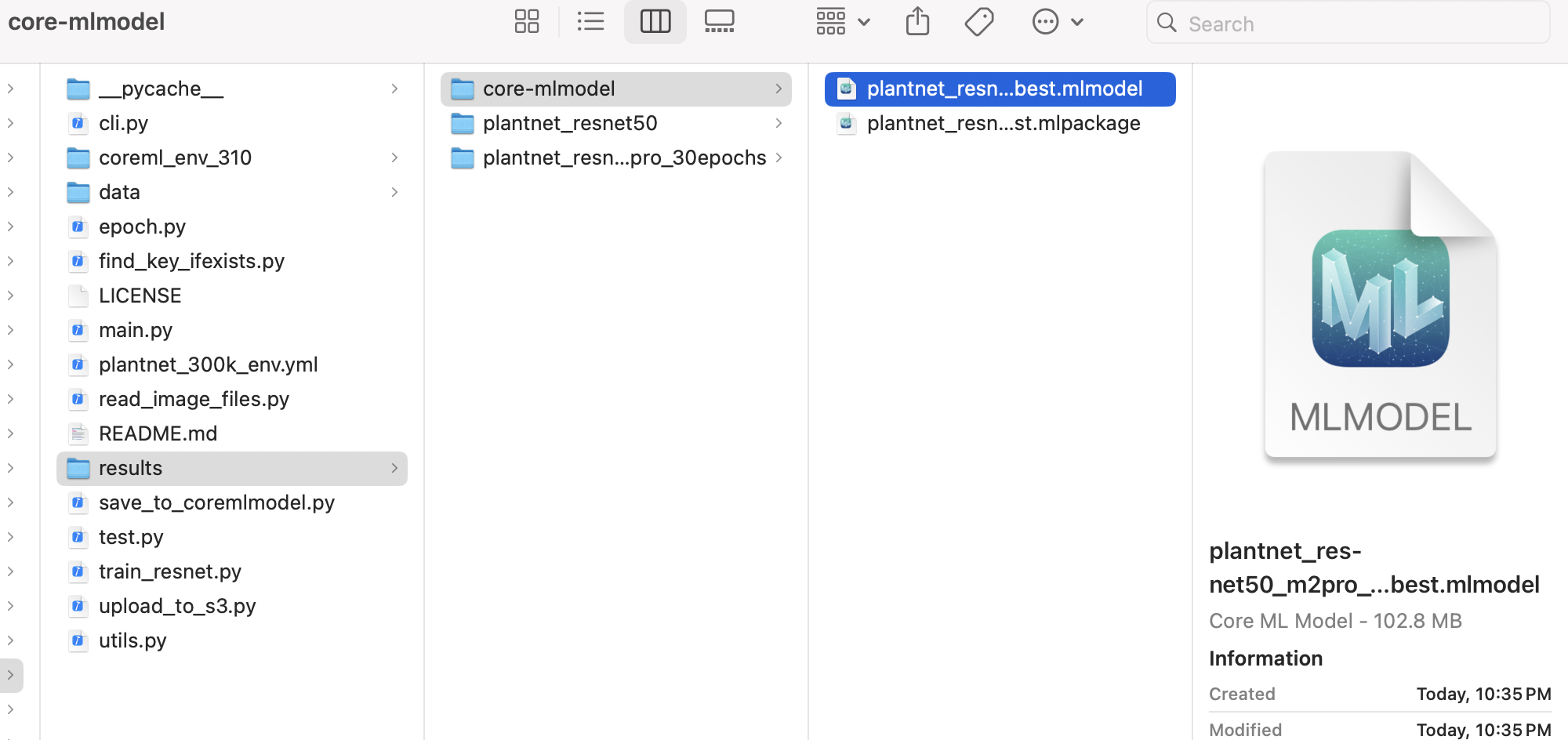

Initially I thought this was viable, based on my earlier experience of bundling a model directly into an app: check out my repo here, where I used a MobileNet model to classify images. That model was basic, so it was small enough to ship as part of the app. This still seems viable. The model may be large, around 100MB, but that is the price of giving our users instant results and offline identification.
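To sanity-check the "around 100MB" figure, here is a rough back-of-the-envelope sketch in Python. It estimates raw weight storage as parameter count times bytes per weight. The parameter counts are rounded, commonly quoted figures that I am assuming here, not measurements from this project, and the result ignores file-format overhead and any compression a mobile toolchain might apply.

```python
# Rough estimate of on-device model size: parameters x bytes per weight.
# Parameter counts are rounded, commonly quoted figures (assumptions, not
# measured from this project).
APPROX_PARAMS = {
    "MobileNetV2": 3_500_000,
    "ResNet-50": 25_600_000,
    "VGG-16": 138_000_000,
}

# Storage cost per weight for a few common numeric precisions.
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def estimate_size_mb(num_params: int, bytes_per_weight: int) -> float:
    """Return raw weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * bytes_per_weight / 1_000_000


def size_table() -> dict:
    """Return {model: {precision: size in MB}} rounded to one decimal."""
    return {
        name: {
            precision: round(estimate_size_mb(count, nbytes), 1)
            for precision, nbytes in BYTES_PER_WEIGHT.items()
        }
        for name, count in APPROX_PARAMS.items()
    }


if __name__ == "__main__":
    for name, sizes in size_table().items():
        row = ", ".join(f"{p}: {mb} MB" for p, mb in sizes.items())
        print(f"{name:12s} {row}")
```

Under these assumptions, a ResNet-50-sized model at full 32-bit precision lands right around 100MB, while storing the same weights at 16-bit would roughly halve that. A MobileNet-class model is an order of magnitude smaller, which matches my earlier experience of it being easy to bundle.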

This project is currently on hold due to the unavailability of a good plant dataset. I'm not in favor of making an API call to an external service, which would add a lot of latency. We will resume in a few months and work on web scraping to acquire the data and retrain our models.